(Part One)

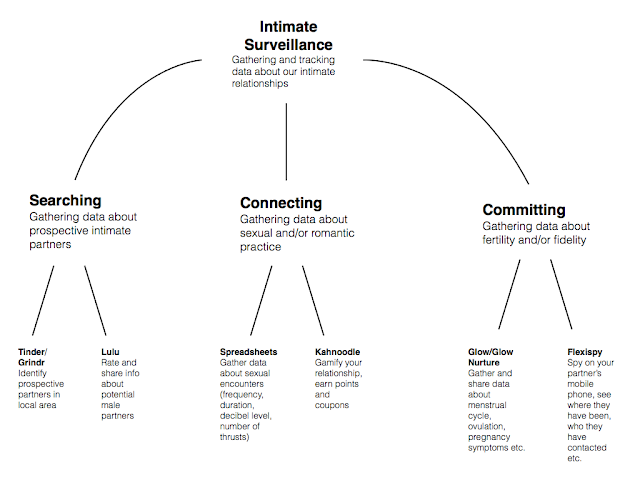

This is the second in a two-part series looking at the ethics of intimate surveillance. In part one, I explained what was meant by the term ‘intimate surveillance’, gave some examples of digital technologies that facilitate intimate surveillance, and looked at what I take to be the major argument in favour of this practice (the argument from autonomy).

To briefly recap, intimate surveillance is the practice of gathering and tracking data about one’s intimate life, i.e. information about prospective intimate partners, information about sexual and romantic behaviours, information about fertility and pregnancy, and information about what your intimate partner is up to. There is a plethora of apps allowing for such intimate surveillance. What’s interesting about them is that they facilitate not only top-down surveillance (i.e. surveillance by app makers, corporate agents and governments) but also interpersonal and self-surveillance. This suggests that a major reason why people make use of these services is to attain more control and mastery over their intimate lives.

I introduced some criticisms of intimate surveillance at the end of the previous post. In this post, I want to continue in that critical mode by reviewing several arguments against the use of these technologies. The plausibility of these arguments will vary depending on the nature of the app or service being used. I’m not going to go into the nitty-gritty here. Instead, I want to survey the landscape of arguments, offering some formalisations of commonly voiced objections along with some critical evaluation. I’m hoping that this exercise will prove useful to others who are researching in the area. Again, the main source and inspiration for this post is Karen Levy’s article ‘Intimate Surveillance’.

1. Arguments from Biased Data

All forms of intimate surveillance depend on the existence of data that can be captured, measured and tracked. Is it possible to know the ages and sexual preferences of all the women/men within a 2-mile radius? Services like Tinder and Grindr make this possible. But what if you wanted to know what they ate today or how many steps they have walked? Technically this data could be gathered and shared via the same services, but at present it is not.

This dependence on data that can be captured, measured and tracked creates problems. What if the data that is being gathered is not particularly useful? What if it is biased in some way? What if it contributes to some form of social oppression? There are at least three objections to intimate surveillance that play upon this theme.

The first rests on a version of the old adage ‘what gets measured gets managed’. If data is being gathered and tracked, it becomes more salient to people and they start to manage their behaviour so as to optimise the measurements. But if the measurements being provided are no good (or biased) then this may thwart preferred outcomes. For example, mutual satisfaction is a key part of any intimate relationship: it’s not all about you and what you want; it’s about working together with someone else to achieve a mutually satisfactory outcome. One danger of intimate surveillance is that it could get one of the partners to focus on behaviours that do not contribute to mutually satisfactory outcomes. In general terms:

- (1) What gets measured gets managed, i.e. if people can gather and track certain forms of data they will tend to act so as to optimise patterns in that data.

- (2) In the case of intimate surveillance, if the optimisation of the data being gathered does not contribute to mutual satisfaction, it will not improve our intimate lives.

- (3) The optimisation of the data being gathered by some intimate surveillance apps does not contribute to mutual satisfaction.

- (4) Therefore, use of those intimate surveillance apps will not improve our intimate lives.

Premise (1) here is an assumption about how humans behave. Premise (2) is the ethical principle. It says that mutual satisfaction is key to a healthy intimate life and anything that thwarts that should be avoided (assuming we want a healthy intimate life). Premise (3) is the empirical claim, one that will vary depending on the service in question. (4) is the conclusion.

Is the argument any good? There are some intimate surveillance apps that would seem to match the requirements of premise (3). Levy gives the example of Spreadsheets, the sex tracker app that I mentioned in part one. This app allows users to collect data about the frequency, duration, number of thrusts and decibel level reached during sexual activity. Presumably, with the data gathered, users are likely to optimise these metrics, i.e. have more frequent, longer-lasting, more thrusting and decibel-raising sexual encounters. While this might do it for some people, the optimisation of these metrics is unlikely to be a good way to ensure mutual satisfaction. The app might get people to focus on the wrong thing.

I think the argument in the case of Spreadsheets might be persuasive, but I would make two comments about this style of argument more generally. First, I’m not sure that the behavioural assumption always holds. Some people are motivated to optimise their metrics; some aren’t. I have lots of devices that track the number of steps I walk, or miles I run. I have experimented with them occasionally, but I’ve never become consumed with the goal of optimising the metrics they provide. In other words, how successful these apps actually are at changing behaviour is up for debate. Second, premise (3) tends to presume incomplete or imperfect data. Some people think that as the network of data gathering devices grows, and as they become more sensitive to different types of information, the problem of biased or incomplete data will disappear. But this might not happen anytime soon and even if it does there remains the problem of finding some way to optimise across the full range of relevant data.

Another argument against intimate surveillance focuses on gender-based inequality and oppression. Many intimate surveillance apps collect and track information about women (e.g. the dating apps that locate women in a geographical region, the spying apps that focus on cheating wives, and the various fertility trackers that provide information about women’s menstrual cycles and associated moods). These apps may contribute to social oppression in at least two ways. First, the data being gathered may be premised upon and contribute to harmful, stereotypical views of women and how they relate to men (e.g. the ‘slutty’ college girl, the moody hormonal woman, the cheating wife and her cuckolded husband etc.). Second, and more generally, they may contribute to the view that women are subjects that can be (and should be) monitored and controlled through surveillance technologies. To put it more formally:

- (5) If something contributes to or reinforces harmful gender stereotypes, or contributes to and reinforces the view that women can be and should be monitored and controlled, it is bad.

- (6) Some intimate surveillance apps contribute to or reinforce harmful gender stereotypes and support the view that women can and should be monitored and controlled.

- (7) Therefore, some intimate surveillance apps are bad.

This is a deliberately vague argument. It is similar to many arguments about gender-based oppression insofar as it draws attention to the symbolic properties of a particular practice and then suggests that these properties contribute to or reinforce gender-based oppression. I’ve looked at similar arguments in relation to prostitution, sex robots and surrogacy in the past. One tricky aspect of any such argument is proving the causal link between the symbolic practice (in this case the data being gathered and organised about women) and gender-based oppression more generally. Empirical evidence is often difficult to gather or inconclusive. This leads people to fall back on purely symbolic arguments or to offer revised views of what causation might mean in this context. A final problem with the argument is that even if it is successful it’s not clear what its implications are. Could the badness of the oppression be offset by other gains (e.g. what if the fertility apps really do enhance women’s reproductive autonomy)?

The third argument in this particular group is a little bit more esoteric. Levy points in its direction with a quote from Deborah Lupton:

These technologies configure a certain type of approach to understanding and experiencing one’s body, an algorithmic subjectivity, in which the body and its health states, functions and activities are portrayed and understood predominantly via quantified calculations, predictions and comparisons.

(Lupton 2015, 449)

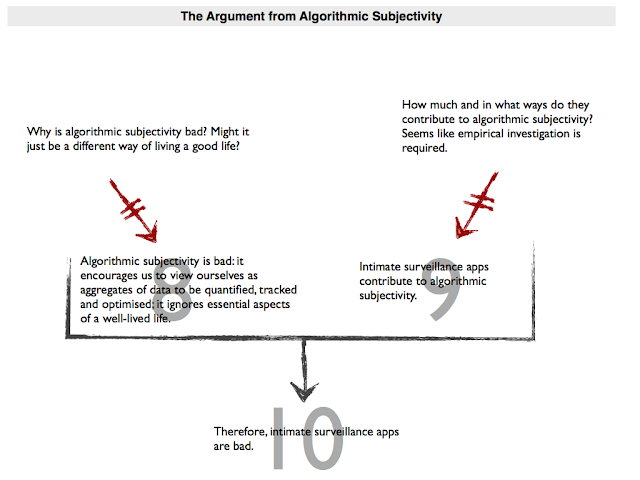

The objection derived from this centres on a concern about algorithmic subjectivity. I have seen it expressed by several others. The concern is always that the apps encourage us to view ourselves as aggregates of data (to be optimised etc.). Why this is problematic is never fully spelled out. I think it is because this form of algorithmic subjectivity is dehumanising and misses out on something essential to the well-lived human life (the unmeasurable, unpredictable, unquantifiable):

- (8) Algorithmic subjectivity is bad: it encourages us to view ourselves as aggregates of data to be quantified, tracked and optimised; it ignores essential aspects of a well-lived life.

- (9) Intimate surveillance apps contribute to algorithmic subjectivity.

- (10) Therefore, intimate surveillance apps are bad.

This strikes me as a potentially very rich argument — one worthy of deeper reflection and consideration. I have mixed feelings about it. It seems plausible to suggest that intimate surveillance contributes to algorithmic subjectivity (though how much and in what ways will require empirical investigation). I’m less sure about whether algorithmic subjectivity is a bad thing. It might be bad if the data being gathered is biased or distorting. But I’m also inclined to think that there are many ways to live a good and fulfilling life. Algorithmic subjectivity might just be different; not bad.

2. Arguments from Core Relationship Values

Another group of objections to intimate surveillance is concerned with its impact on relationships. The idea is that there are certain core values associated with any healthy relationship and that intimate surveillance tends to corrupt or undermine those values. I’ll look at two such objections here: the argument from mutual trust; and the argument from informal reciprocal altruism (or solidarity).

Before I do so, however, I would like to voice a general concern about this style of argument. I’m sceptical of essentialist approaches to healthy relationships, i.e. approaches that assume healthy relationships must have certain core features. There are a few reasons for this, but most of them flow from my sense that the contours of a healthy relationship are largely shaped by the individuals who are party to that relationship. I certainly think it is important for the parties to the relationship to respect one another’s autonomy and to ensure that there is informed consent, but beyond that I think people can make all sorts of different relationships work. The other major issue I have is that I’m not sure what a healthy relationship really is. Is it one that lasts indefinitely? Can you have a healthy on-again off-again relationship? Abuse and maltreatment are definite no-gos, but beyond that I’m not sure what makes things work.

Setting that general concern to the side, let’s look at the argument from mutual trust. It works something like this:

- (11) A central virtue of any healthy relationship is mutual trust, i.e. a willingness to trust that your partner will act in a way that is consistent with your interests and needs without having to monitor and control them.

- (12) Intimate surveillance undermines mutual trust.

- (13) Therefore, intimate surveillance prevents you from having a healthy relationship.

The support for (12) is straightforward enough. There are certain apps that allow you to spy on your partner’s smartphone: see who they have been texting/calling, where they have been, and so on. If you use these apps, you are clearly demonstrating that you are unwilling to trust your partner without monitoring and control. So you are clearly undermining mutual trust.

I agree with this argument up to a point. If I spy on my partner’s phone without her consent, then I’m definitely doing something wrong: I’m failing to respect her autonomy and privacy and I’m not being mature, open and transparent. But it strikes me that there is a deeper issue here: what if she is willing to consent to my use of the spying app as a gesture of her commitment? Would it still be a bad idea to use it? I’m less convinced. To argue the affirmative you would need to show that having (blind?) faith in your partner is essential to a healthy relationship. You would also have to contend with the fact that mutual trust may be too demanding, and that petty jealousy is all too common. Maybe it would be good to have a ‘lesser evil’ option?

The second argument against intimate surveillance is the argument from informal reciprocal altruism (or solidarity). This is a bit of a mouthful. The idea is that relationships are partly about sharing and distributing resources. At the centre of any relationship there are two (or more) people who get together and share income, time, manual labour, emotional labour and so on. But what principle do people use to share these resources? Based on my own anecdotal experience, I reckon people adopt a type of informal reciprocal altruism. They effectively agree that if one of them does something for the other, then the other will do something else in return, but no one really keeps score to make sure that every altruistic gesture is matched with an equal and opposite altruistic gesture. They know that it is part of their commitment to one another that it will all pretty much balance out in the end. They think: “we are in this together and we’ve got each other’s backs”.

This provides the basis for the following argument:

- (14) A central virtue of any healthy relationship is that resources are shared between the partners on the basis of informal reciprocal altruism (i.e. the partners do things for one another but don’t keep score as to who owes what to whom).

- (15) Intimate surveillance undermines informal reciprocal altruism.

- (16) Therefore, intimate surveillance prevents you from having a healthy relationship.

The support for (15) comes from the example of apps that try to gamify relationships by tracking data about who did what for whom, assigning points to these actions, and then creating an exchange system whereby one partner can cash in these points for favours by the other partner. The concern is that this creates a formal exchange mentality within a relationship. Every time you do the laundry for your partner you expect them to do something equivalently generous and burdensome in return. If they don’t, you will feel aggrieved and will try to enforce their obligation to reciprocate.

I find this objection somewhat appealing. I certainly don’t like the idea of keeping track of who owes what to whom in a relationship. If I pay for the cinema tickets, I don’t automatically expect my partner to pay for the popcorn (though we may often end up doing this). But there are some countervailing considerations. Many relationships are characterised by inequalities of bargaining power (typically gendered): one party ends up doing the lion’s share of care work (say). Formal tracking and measuring of actions might help to redress this inequality. It could also save people from emotional anguish and feelings of injustice. Furthermore, some people seem to make formal exchanges of this sort work. The creators of the Beeminder app, for instance, appear to have a fascinating approach to their relationship.

3. Privacy-related Objections

The final set of objections returns the debate to familiar territory: privacy. Intimate surveillance may involve both top-down and horizontal privacy harms. That is to say, privacy harms due to the fact that corporations (and maybe governments) have access to the data being captured by the relevant technologies; and privacy harms due to the fact that one’s potential and actual partners have access to the data.

I don’t have too much to say about privacy-related objections. This is because they are widely debated in the literature on surveillance and I’m not sure that they are all that different in the debate about intimate surveillance. They all boil down to the same thing: the claim that the use of these apps violates somebody’s privacy. This is because the data is gathered and used either without the consent of the person whose data it is (e.g. Facebook stalking), or with imperfect (i.e. not fully informed) consent. It is no doubt true that this is often the case. App makers frequently package and sell the data they mine from their users: it is intrinsic to their business model. And certain apps, like the ones that allow you to spy on your partner’s phone, seem to encourage their users to violate their partner’s privacy.

The critical question then becomes: why should we be so protective of privacy? I think there are two main ways to answer this:

Privacy is intrinsic to autonomy: The idea here is that we have a right to control how we present ourselves to others (what bits get shared etc) and how others use information about us; this right is tied into autonomy more generally; and these apps routinely violate this right. This argument works no matter how the information is used (i.e. even if it is used for good). The right may need to be counterbalanced against other considerations and rights, but it is a moral harm to violate it no matter what.

Privacy is a bulwark against the moral imperfection of others: The idea here is that privacy is instrumentally useful. People often argue that if you are a morally good person you should have nothing to hide. This might be true, but it forgets that other people are not morally perfect. They may use information about you to further some morally corrupt enterprise or goal. Consequently, it’s good if we can protect people from at least some unwanted disclosures of personal information. The ‘outing’ of homosexuals is a good example of this problem. There is nothing morally wrong about being a homosexual. In a morally perfect world you should have nothing to fear from the disclosure of your sexuality. But the world isn’t morally perfect: some people in some communities persecute homosexuals. In those communities, homosexuals clearly should have the right to hide their sexuality from others. The same could apply to the data being gathered through intimate surveillance technology. While you might not be doing anything morally wrong, others could use the information gathered for morally corrupt ends.

I think both of these arguments have merit. I’m less inclined toward the view that privacy is an intrinsic good and necessarily connected to autonomy, but I do think that it provides protection against the moral imperfection of others. We should work hard to protect the users of intimate surveillance technology from the unwanted and undesirable disclosure of their personal data.

Okay, that brings me to the end of this series. I won’t summarise everything I have just said. I think the diagrams given above summarise the landscape of objections already. But have I missed something? Are there other objections to the practice of intimate surveillance? Please add suggestions in the comments section.