(I originally thought this would be a more interesting blog post, but I think the final product is slightly underwhelming. Indeed, I thought about not posting it at all. In the end, I felt there might be some value to it, particularly since there might be those who disagree with my analysis. If you are one of them, I'd love to hear from you in the comments.)

Consider the following passage from Richard Dawkins’s book Unweaving the Rainbow:

We are going to die, and that makes us the lucky ones. Most people are never going to die because they are never going to be born. The potential people who could have been here in my place but who will in fact never see the light of day outnumber the sand grains of Arabia. Certainly those unborn ghosts include greater poets than Keats, scientists greater than Newton. We know this because the set of possible people allowed by our DNA so massively exceeds the set of actual people. In the teeth of these stupefying odds it is you and I, in our ordinariness, that are here. We privileged few, who won the lottery of birth against all odds, how dare we whine at our inevitable return to that prior state from which the vast majority have never stirred?

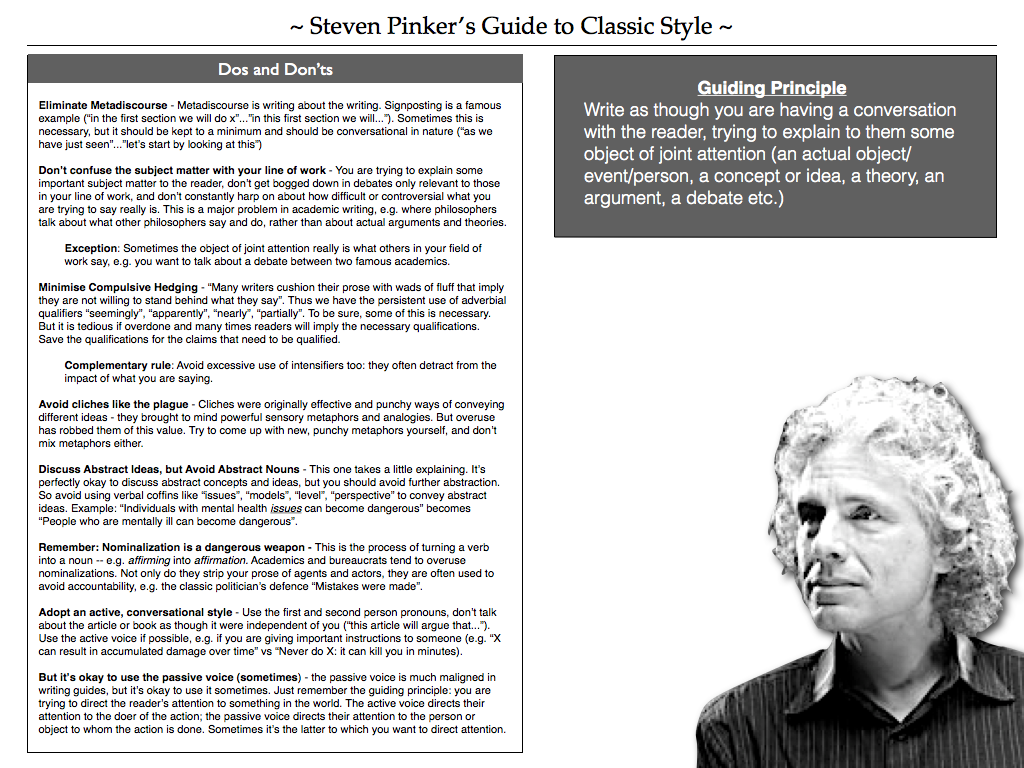

As Steven Pinker points out in his recent book, this is a rhetorically powerful passage. It is robust, punchy and replete with evocative and dramatic imagery (“the sand grains of Arabia”, “unborn ghosts”, “teeth of these stupefying odds”). Indeed, so powerful is it that many non-religious people, Dawkins included, have asked for it to be read at their funerals (Dawkins has also read the passage at a public lecture, to rapturous applause).

While I can certainly appreciate the quality of the writing, I am, alas, somewhat prone to “unweaving the rainbow” myself. If we stripped away the lyrical writing, what would we be left with? To be more precise, what kind of argument would we be left with? It seems to me that Dawkins is indeed trying to present some kind of argument: he has conclusions that he wants us to accept. Specifically, he wants us to be consoled by the fact that we are going to die; to stop whining about our deaths; to stop fearing our ultimate demise. And this is all because we are lucky to be alive. In this respect, I think that what Dawkins is doing is analogous to what the classic Epicurean writers did when they tried to soothe our death-related anxieties. But is his argument any good? That’s the question I will try to answer.

I’ll start by looking at the classic Epicurean arguments and draw out the analogy between them and what Dawkins is trying to do. Once that task is complete, I’ll try to formulate and evaluate Dawkins’s argument.

1. The Epicurean Tradition

There are two classic Epicurean arguments about death. The first comes from Epicurus himself; the second comes from Lucretius, who was a follower of Epicureanism. Epicurus’s argument is contained in the following passage:

Foolish, therefore, is the man who says that he fears death, not because it will pain when it comes, but because it pains in the prospect. Whatever causes no annoyance when it is present, causes only a groundless pain in the expectation. Death, therefore, the most awful of evils, is nothing to us, seeing that, when we are, death is not come, and, when death is come, we are not. It is nothing, then, either to the living or to the dead, for with the living it is not and the dead exist no longer.

(Epicurus, Letter to Menoeceus)

The argument is all about our attitude towards death (that is: the state of being dead, not the process of dying). Most people fear death. They think it among the greatest of the evils that befall us. But Epicurus is telling us they are wrong. The only things that are good or bad are conscious pleasure and pain. Death entails the absence of both. Therefore, death is not bad and we should stop worrying about it. I’ve discussed a more complicated version of this argument before, in case you are interested, but that’s the gist of it.

Let’s turn then to Lucretius’s argument. This one comes from a passage of De Rerum Natura, which I believe is the only piece of writing we have from Lucretius:

In days of old, we felt no disquiet... So, when we shall be no more — when the union of body and spirit that engenders us has been disrupted — to us, who shall then be nothing, nothing by any hazard will happen any more at all. Look back at the eternity that passed before we were born, and mark how utterly it counts to us as nothing. This is a mirror that Nature holds up to us, in which we may see the time that shall be after we are dead.

This argument builds upon that of Epicurus by adding a supporting analogy. The analogy asks us to compare the state of non-existence prior to our births with the state of non-existence after our deaths. Since the former is not something we worry about, the latter should likewise “count to us as nothing”. This is sometimes referred to as the symmetry argument, because it holds that we should have a symmetrical attitude toward pre-natal and post-mortem non-existence. Some people think that Lucretius adds little to what Epicurus originally argued; others think Lucretius’s argument has its own merits. Again, this is something I have discussed in more detail before.

I won’t assess the merits of either argument here. Instead, I’ll just highlight some general features. Note how both arguments try to call our attention to some “surprising fact”: the centrality of pain and pleasure to our well-being, in Epicurus’s case (this might be less radical now than it was in his day); and our attitude to pre-natal non-existence, in Lucretius’s case. Then note how they both use this surprising fact to reorient our perspective on death. They both claim that this surprising fact has the implication that we should not join the masses in fearing our deaths; instead, we should treat our deaths with equanimity.

2. Dawkins and the Argument from Genetic Luck

My feeling is that Dawkins is trying to do the same thing in his “We are going to die” passage. In his case, however, the “surprising fact” has nothing to do with conscious experience or our attitudes towards non-existence prior to birth; it has to do with the improbability of our existence in the first place.

So how should we interpret this argument? Look first to the wording. Dawkins seems to be concerned with those who spend their lives ‘whining’ about death. He thinks they don’t fully appreciate the rare ‘privilege’ they have in being alive at all, particularly when they compare their ordinariness to the set of possible people who could have existed. He tells them (actually all of us) that they are the “lucky ones” because they are going to die, not in spite of it.

This suggests that we could interpret Dawkins’s argument in something like the following form:

- (1) If we are lucky to be alive, then we should not be upset by the fact that we are going to die.

- (2) We are lucky to be alive.

- (3) Therefore, we should not be upset by the fact that we are going to die.

How do the premises of this argument work? Let’s start with premise (1). The implication contained in the premise is that we should be grateful for the opportunity of being alive, even if that entails our deaths. This suggests that the argument is an argument from gratitude. Dawkins is telling us to be grateful for the rare privilege of dying. The problem I have with this is that gratitude has a somewhat uncertain place in a non-religious worldview. Gratitude is typically something we experience in our relationships with others. I am grateful to my parents for supporting me and paying for my education; I am grateful to my friends for buying me an expensive gift; and so on. If we think of our lives as being gifts from a benevolent creator, then being grateful, arguably, makes sense. But Dawkins is, famously, an atheist. So he must be relying on a different notion of gratitude. He must be saying that we should be grateful to the causal contingency of the natural order for allowing us to exist. But this seems perverse. The natural order is impersonal and uncaring: it just rolls along in accordance with certain causal laws. Why should we feel grateful to it? This same natural order is, after all, responsible for untold human suffering, e.g. suffering from natural disasters, viral infections, cancer and other unpleasantries. These are facets of the natural order that we tend not to accept. In fact, they are things we generally try to overcome. Why should we feel grateful for being plunged into a life filled with suffering of this sort? Couldn’t it be that death is one of the facets of life that we should use our ingenuity to overcome?

Now, I don’t want to be entirely dismissive of this line of argument. Michael Sandel and Michael Hauskeller have tried to articulate a secular sense of gratitude that might fit with Dawkins’s argument (though I have my doubts). Nor do I think that rejecting gratitude should lead us to resentment. I don’t think resentment toward the natural order is any more appropriate than gratitude. Indeed, I suspect it may even be counter-productive. For example, if we take up the suggestion at the end of the previous paragraph, and think that we should use our ingenuity to overcome death, I suspect we will end up being pretty disappointed. That’s not to say that efforts to achieve life extension are to be rejected. It’s just to say that it’s probably unwise to make them the hinge upon which you hang all your hopes and aspirations. I tend to favour a more stoic attitude to the natural order, which involves adjusting one’s hopes and desires so that they are reconciled with the likelihood of death.

I think these criticisms point toward the untenability of Dawkins’s argument, at least insofar as it attempts to console us about our deaths. But for the sake of completeness, let’s also consider the second premise. Is it true to say that we are lucky to be alive? This is probably the issue Dawkins spends most time on in the passage. He uses an argument from genetic luck: the set of possible combinations of DNA is vastly larger than the set of actual people, including you. Your particular combination of DNA is just a tiny, tiny slice of that probability space.

I would be inclined to accept this argument. I don’t doubt that the set of possible people is much larger than the set of actual people. The question, of course, is whether all members of that set are equiprobable. Dawkins seems to think that they are. Indeed, he seems to adopt something akin to the principle of indifference when it comes to assessing the probability of members of that set. Is this the right principle to adopt? I’m not sure. If one accepts causal determinism, then maybe my existence wasn’t lucky at all: it was causally predetermined by previous events. It could never have been any other way. Still, I don’t think the fact (if it is a fact) of causal determinism affects my probability judgments in relation to other, potentially causally determined, phenomena, like, say, national or state lotteries. So it probably shouldn’t affect my judgment in this case either.

In other words, I think premise (2) is okay. The real issue is with premise (1) and whether luck entails some change in our attitude toward death. As I said above, I don’t see why this has to be the case.